Basis and coordinates


Revision as of 16:35, 8 March 2015

This is very much a "motivation" page and a discussion of the topic.

See Change of basis matrix for the more mathematical treatment.

What is a coordinate

Suppose we have a finite Basis, [math]\{b_1,\ldots,b_n\}[/math]. A point [ilmath]p[/ilmath] is given by [math]p=\sum^n_{i=1}a_ib_i[/math] and is said to have coordinates [math](a_1,\ldots,a_n)[/math]. Because the basis set must be linearly independent, there is only one such [math](a_1,\ldots,a_n)[/math], hence "coordinate".
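In two dimensions the coefficients [math](a_1,a_2)[/math] can be computed directly. Below is a minimal sketch (the function name coordinates_2d is our own, hypothetical choice) that solves [math]a_1b_1+a_2b_2=p[/math] by Cramer's rule. It assumes the basis vectors and the point are all written in the standard basis of [ilmath]\mathbb{R}^2[/ilmath] and given as integers or Fractions, so the arithmetic stays exact.

```python
from fractions import Fraction


def coordinates_2d(b1, b2, p):
    """Return the unique (a1, a2) with a1*b1 + a2*b2 == p.

    b1, b2 and p are 2-tuples of ints or Fractions, all written in the
    standard basis. Uses Cramer's rule on the 2x2 system [b1 b2] a = p.
    """
    det = b1[0] * b2[1] - b2[0] * b1[1]
    if det == 0:
        # Linearly dependent vectors do not form a basis, so coordinates
        # would not be unique (or might not exist at all).
        raise ValueError("b1 and b2 are linearly dependent, not a basis")
    a1 = Fraction(p[0] * b2[1] - b2[0] * p[1], det)
    a2 = Fraction(b1[0] * p[1] - p[0] * b1[1], det)
    return (a1, a2)


# Standard basis: the coordinates are just the components.
print(coordinates_2d((1, 0), (0, 1), (3, 4)) == (3, 4))  # True

# A basis of half-length vectors, like the "prime" paper in the first example.
half = Fraction(1, 2)
print(coordinates_2d((half, 0), (0, half), (0, 1)) == (0, 2))  # True
```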

First example

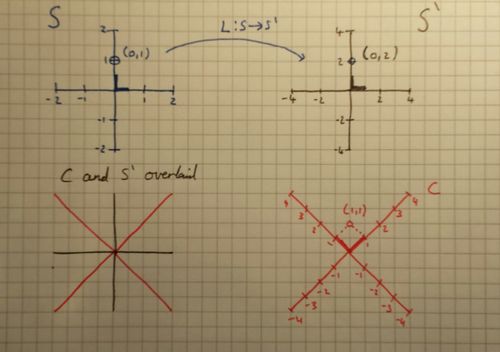

Let us suppose we have two people working on squared paper. One of them is working on ordinary squared paper; the other has found squared paper whose squares have half the side length.

Immediately one sees that the point [ilmath](0,1)[/ilmath] in our space is [ilmath](0,2)[/ilmath] in theirs.

We will call their space "prime" space: a coordinate [ilmath](x,y)[/ilmath] refers to our paper, and [ilmath](x,y)'[/ilmath] or [ilmath](x',y')[/ilmath] refers to theirs.

It is immediately obvious that [math](x,y)=(2x,2y)'[/math], but is this a linear transform? Recall that to be linear we need [math]T(ax+by)=aT(x)+bT(y)[/math].

Let us try it: [math](ax_1+bx_2,ay_1+by_2)=(2ax_1+2bx_2,2ay_1+2by_2)'=a(2x_1,2y_1)'+b(2x_2,2y_2)'[/math]

So it is linear. What, then, is the change of basis matrix? Even without knowing the general method, we can see quite easily that:

[math]\begin{pmatrix}2 & 0 \\ 0 & 2\end{pmatrix}\begin{pmatrix}x\\y\end{pmatrix}=\begin{pmatrix}2x\\2y\end{pmatrix}[/math]
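To make this concrete, here is a small sketch with plain tuples (the helper mat_vec is our own, hypothetical name) that applies [math][L]_S^{S'}[/math] to a point and spot-checks the linearity condition for one arbitrary choice of [ilmath]a,b[/ilmath] and two points. A single numerical check is not a proof; the algebra above is what shows linearity in general.

```python
def mat_vec(m, v):
    """Multiply a 2x2 matrix, given as a tuple of rows, by a 2-vector."""
    return (m[0][0] * v[0] + m[0][1] * v[1],
            m[1][0] * v[0] + m[1][1] * v[1])


# [L]_S^{S'}: takes coordinates on our paper to coordinates on their paper.
L_S_to_S_prime = ((2, 0),
                  (0, 2))

print(mat_vec(L_S_to_S_prime, (0, 1)))  # (0, 2)

# Spot-check T(a*u + b*w) == a*T(u) + b*T(w) for one arbitrary choice.
a, b = 3, -5
u, w = (1, 4), (-2, 7)
lhs = mat_vec(L_S_to_S_prime, (a * u[0] + b * w[0], a * u[1] + b * w[1]))
Tu, Tw = mat_vec(L_S_to_S_prime, u), mat_vec(L_S_to_S_prime, w)
rhs = (a * Tu[0] + b * Tw[0], a * Tu[1] + b * Tw[1])
print(lhs == rhs)  # True
```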

Formal definitions

- Let [math]S[/math] be our squared paper

- Let [math]S'[/math] be their squared paper

- Let [math]L:S\rightarrow S'[/math] be the transform that takes a point's coordinates on our paper to its coordinates on their paper.

Then the matrix form of [ilmath]L[/ilmath] is denoted [math][L]_S^{S'}[/math], and [math][L]_S^{S'}=\begin{pmatrix}2&0\\0&2\end{pmatrix}[/math].

Notice the notation: [math][L]^\text{to}_\text{from}[/math].

Inverse

It is clear that to go from [ilmath]S'[/ilmath] back to [ilmath]S[/ilmath] the transform is simply [math](x,y)'=(\frac{1}{2}x,\frac{1}{2}y)[/math], and it is easy to show that this is linear. Let us call this map [ilmath]K[/ilmath] and define it as follows:

[math]K:S'\rightarrow S[/math], with [math][K]_{S'}^S=\begin{pmatrix}\frac{1}{2}&0\\0&\frac{1}{2}\end{pmatrix}[/math]

This notation seems a little heavy and redundant (for example, what would [math][K]_A^B[/math] mean?); we will come to that later.

Combining things together

We now have the functions:

- [math]L:S\rightarrow S'[/math]

- [math]K:S'\rightarrow S[/math]

These are linear isomorphisms (they are bijective and linear), and, just like any functions, we can compose them.

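[math][K]_{S'}^S[L]_S^{S'}[/math] is the transform that takes a point in [ilmath]S[/ilmath] to [ilmath]S'[/ilmath], followed by another transform that takes that point in [ilmath]S'[/ilmath] back to a point in [ilmath]S[/ilmath]. Matrix products act right to left, so the rightmost factor is applied first, and the indices in the middle match up: the upper [ilmath]S'[/ilmath] on [ilmath]L[/ilmath] meets the lower [ilmath]S'[/ilmath] on [ilmath]K[/ilmath].

If you multiply these matrices you get the identity matrix, [math]\begin{pmatrix}\frac{1}{2}&0\\0&\frac{1}{2}\end{pmatrix}\begin{pmatrix}2&0\\0&2\end{pmatrix}=\begin{pmatrix}1&0\\0&1\end{pmatrix}[/math], that is [math]([K]_{S'}^S[L]_S^{S'})(x,y)=(x,y)[/math]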
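The multiplication can also be checked mechanically. This is a minimal sketch (the helper name mat_mul is our own); exact Fractions are used so that [math]\frac{1}{2}\cdot 2[/math] comes out as exactly 1. It multiplies in both orders, since here both compositions give the identity.

```python
from fractions import Fraction


def mat_mul(x, y):
    """Product x*y of two 2x2 matrices (tuples of rows); y acts first."""
    return tuple(
        tuple(sum(x[i][k] * y[k][j] for k in range(2)) for j in range(2))
        for i in range(2)
    )


half = Fraction(1, 2)
L = ((2, 0), (0, 2))        # [L]_S^{S'}
K = ((half, 0), (0, half))  # [K]_{S'}^S

identity = ((1, 0), (0, 1))
print(mat_mul(K, L) == identity)  # True: S -> S' -> S
print(mat_mul(L, K) == identity)  # True: S' -> S -> S'
```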

Introducing a third person

Figure: What we have so far, including the third person. There is an error within the [ilmath]S[/ilmath] space: the thick blue lines representing the basis vectors should be double their depicted length.

The bold lines are the "basis" vectors; notice that the unit square differs in each of these spaces.

Rather than working out the transform from [ilmath]C[/ilmath] to [ilmath]S'[/ilmath] (or between any other pair) directly, we can simply notice:

[math]\begin{pmatrix}a&\times \\ b&\times\end{pmatrix}\begin{pmatrix}1\\0\end{pmatrix}=\begin{pmatrix}a\\b\end{pmatrix}[/math]

Recall the definition of a coordinate: [math]\begin{pmatrix}1\\0\end{pmatrix}[/math] is the point [math]1b_1+0b_2=b_1[/math], where [ilmath]b_1[/ilmath] is the first basis vector.

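So this transform takes the first basis vector of one space to the point with coordinates [math]\begin{pmatrix}a\\b\end{pmatrix}[/math] in the other: the first column of the matrix is the image of the first basis vector, and likewise the second column is the image of the second.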
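The column rule is easy to check numerically. In this sketch the helper names mat_vec, column and from_columns are hypothetical, and M is an arbitrary example matrix, not one from this page. Multiplying by each standard basis vector picks out the matching column, and conversely a matrix can be assembled by writing the images of the basis vectors as its columns.

```python
def mat_vec(m, v):
    """Multiply a 2x2 matrix, given as a tuple of rows, by a 2-vector."""
    return (m[0][0] * v[0] + m[0][1] * v[1],
            m[1][0] * v[0] + m[1][1] * v[1])


def column(m, j):
    """Return column j (0 or 1) of a 2x2 matrix given as a tuple of rows."""
    return (m[0][j], m[1][j])


def from_columns(c1, c2):
    """Build the 2x2 matrix (tuple of rows) whose columns are c1 and c2."""
    return ((c1[0], c2[0]),
            (c1[1], c2[1]))


M = ((1, 7),
     (3, -2))  # arbitrary example matrix
e1, e2 = (1, 0), (0, 1)

print(mat_vec(M, e1) == column(M, 0))  # True: first column is where e1 goes
print(mat_vec(M, e2) == column(M, 1))  # True: second column is where e2 goes
print(from_columns(mat_vec(M, e1), mat_vec(M, e2)) == M)  # True
```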

We will use [math](x,y)''[/math] to denote a point in the third person's space, [ilmath]C[/ilmath].

Coordinates again

It is clear from the diagram that [math](0,1)=(0,2)'=(1,1)''[/math]
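We can check this by rebuilding the actual point from each set of coordinates, [math]p=\sum_i a_ib_i[/math], with every basis vector written in [ilmath]S[/ilmath] coordinates. The basis of [ilmath]S'[/ilmath] (half-length squares) follows from the first example. The basis of [ilmath]C[/ilmath] is not stated explicitly on this page, so the values used below, [math]c_1=(\frac{1}{2},\frac{1}{2})[/math] and [math]c_2=(-\frac{1}{2},\frac{1}{2})[/math], are an assumption: they are what inverting the matrix [math][G]_S^C[/math] from "Computing the change of basis" gives. The helper name point_from_coords is hypothetical.

```python
from fractions import Fraction


def point_from_coords(basis, coords):
    """Return sum(a_i * b_i), each b_i given in S (our paper's) coordinates."""
    x = sum(a * b[0] for a, b in zip(coords, basis))
    y = sum(a * b[1] for a, b in zip(coords, basis))
    return (x, y)


half = Fraction(1, 2)
S_basis = [(1, 0), (0, 1)]
S_prime_basis = [(half, 0), (0, half)]   # squares of half the side length
C_basis = [(half, half), (-half, half)]  # assumed, inferred from [G]_S^C

# (0,1), (0,2)' and (1,1)'' should all be the same point of the plane.
print(point_from_coords(S_basis, (0, 1)) == (0, 1))        # True
print(point_from_coords(S_prime_basis, (0, 2)) == (0, 1))  # True
print(point_from_coords(C_basis, (1, 1)) == (0, 1))        # True
```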

Interpreting the determinant

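Notice that the unit square in [ilmath]S[/ilmath] is 4 of [ilmath]S'[/ilmath]'s squares in area, and that [math]\text{Det}(\begin{pmatrix}2&0\\0&2\end{pmatrix})=4[/math]. This is indeed the geometric interpretation of the determinant: [math]\text{Det}([L]_S^{S'})[/math] counts how many unit squares of [ilmath]S'[/ilmath] fit into one unit square of [ilmath]S[/ilmath].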

Using [ilmath]\ell^2_\text{Space} [/ilmath] to denote the area of a unit square in Space, we see that

[math]1\ell^2_S=4\ell^2_{S'}=2\ell_C^2[/math]

Visually, there are 4 half squares in the unit square in [ilmath]C[/ilmath], which makes 2 full squares (keep in mind that one square on my paper is one square in [ilmath]S'[/ilmath]). So yes: 4 full squares = 1 unit square in [ilmath]S[/ilmath] = 2 unit squares in [ilmath]C[/ilmath].

This can take a while to get used to, but it is really quite a nice and easy thing to see.
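The area claims can be checked with the usual 2x2 formula [math]\text{Det}\begin{pmatrix}p&q\\r&s\end{pmatrix}=ps-qr[/math]. The sketch below (the helper name det2 is our own) uses [math][L]_S^{S'}[/math] from the first example and [math][G]_S^C=\begin{pmatrix}1&1\\-1&1\end{pmatrix}[/math], the matrix computed in "Computing the change of basis". The two results match [math]1\ell^2_S=4\ell^2_{S'}[/math] and [math]1\ell^2_S=2\ell^2_C[/math].

```python
def det2(m):
    """Determinant of a 2x2 matrix given as a tuple of rows."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]


L_S_to_S_prime = ((2, 0),
                  (0, 2))   # [L]_S^{S'}
G_S_to_C = ((1, 1),
            (-1, 1))        # [G]_S^C

# One unit square of S covers det(...) unit squares of the target space.
print(det2(L_S_to_S_prime))  # 4
print(det2(G_S_to_C))        # 2
```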

Inverse

If [math]1\ell_S^2=4\ell^2_{S'}[/math], then surely we have [math]1\ell^2_{S'}=\frac{1}{4}\ell^2_{S}[/math], and indeed we do. This is the geometric interpretation of [math]\text{Det}(A^{-1})=\frac{1}{\text{Det}(A)}[/math], and the core of the theorem that:

- A matrix is invertible if and only if it has non-zero determinant
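This can be illustrated with the adjugate formula for a 2x2 inverse, [math]A^{-1}=\frac{1}{\text{Det}(A)}\begin{pmatrix}s&-q\\-r&p\end{pmatrix}[/math] for [math]A=\begin{pmatrix}p&q\\r&s\end{pmatrix}[/math], which visibly needs [math]\text{Det}(A)\neq 0[/math]. In this sketch (helper names are our own), inverting [math][L]_S^{S'}[/math] recovers [math][K]_{S'}^S[/math] with determinant [math]\frac{1}{4}[/math], and an arbitrary matrix with zero determinant is rejected.

```python
from fractions import Fraction


def det2(m):
    """Determinant of a 2x2 matrix given as a tuple of rows."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]


def inverse2(m):
    """Inverse of a 2x2 matrix via the adjugate formula (exact Fractions)."""
    d = det2(m)
    if d == 0:
        raise ValueError("zero determinant: the matrix is not invertible")
    d = Fraction(d)
    return ((m[1][1] / d, -m[0][1] / d),
            (-m[1][0] / d, m[0][0] / d))


L = ((2, 0), (0, 2))  # [L]_S^{S'}
K = inverse2(L)       # should be [K]_{S'}^S
half = Fraction(1, 2)
print(K == ((half, 0), (0, half)))       # True
print(det2(K) == 1 / Fraction(det2(L)))  # True: both are 1/4

try:
    inverse2(((1, 2), (2, 4)))  # second row is twice the first
except ValueError as err:
    print(err)  # zero determinant: the matrix is not invertible
```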

Computing the change of basis

So using [math]\begin{pmatrix}a&\times \\ b&\times\end{pmatrix}\begin{pmatrix}1\\0\end{pmatrix}=\begin{pmatrix}a\\b\end{pmatrix}[/math] we wish to find a map

- [math][G]_S^C[/math]

- [math]G:S\rightarrow C[/math]

Either of these will do.

It is clear that [math](0,1)\mapsto(1,1)[/math] and [math](1,0)\mapsto(1,-1)[/math], so, writing these images as the columns, we quickly get the matrix [math][G]_S^C=\begin{pmatrix}1&1\\-1&1\end{pmatrix}[/math]. We can of course compose this just like before; for example, [math][G]_S^C[K]_{S'}^S:S'\rightarrow C[/math] takes a point from [ilmath]S'[/ilmath] to [ilmath]S[/ilmath] and then to [ilmath]C[/ilmath] (the rightmost factor is applied first).
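A minimal sketch of this composition (the helper names mat_mul and mat_vec are our own). Matrix products act right to left, so the map [math]S'\rightarrow S\rightarrow C[/math] is the product [math][G]_S^C[K]_{S'}^S[/math], with [math][K]_{S'}^S[/math] applied first; the upper index of each factor matches the lower index of the factor to its left. Applied to [math](0,2)'[/math] it should give [math](1,1)''[/math], agreeing with "Coordinates again".

```python
from fractions import Fraction


def mat_mul(x, y):
    """Product x*y of 2x2 matrices (tuples of rows); y is applied first."""
    return tuple(
        tuple(sum(x[i][k] * y[k][j] for k in range(2)) for j in range(2))
        for i in range(2)
    )


def mat_vec(m, v):
    """Multiply a 2x2 matrix, given as a tuple of rows, by a 2-vector."""
    return (m[0][0] * v[0] + m[0][1] * v[1],
            m[1][0] * v[0] + m[1][1] * v[1])


half = Fraction(1, 2)
K = ((half, 0), (0, half))  # [K]_{S'}^S
G = ((1, 1), (-1, 1))       # [G]_S^C

GK = mat_mul(G, K)          # [G]_S^C [K]_{S'}^S : S' -> S -> C
print(mat_vec(GK, (0, 2)) == (1, 1))   # True: (0,2)' is (1,1)''
print(mat_vec(GK, (2, 0)) == (1, -1))  # True: (2,0)' = (1,0) is (1,-1)''
```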

Notation

In all the cases above we actually had maps on [math]\mathbb{R}^2[/math]; for example, [math]S[/math] was just [math]\mathbb{R}^2[/math] with a certain basis. Strictly, then, we should have written [math]L:\mathbb{R}^2\rightarrow\mathbb{R}^2[/math] rather than [math]L:S\rightarrow S'[/math], but this is less descriptive.

For two bases [ilmath]A[/ilmath] and [ilmath]B[/ilmath], do not be afraid to write [math]L:\mathbb{R}^2_A\rightarrow\mathbb{R}^2_B[/math], but do not be eager to either: notice that our three functions, [ilmath]L[/ilmath], [ilmath]K[/ilmath] and [ilmath]G[/ilmath], do not actually do anything. They map a point to the same point, just expressed in a different basis.

This is where the [math][\cdot]^B_A[/math] notation comes in handy.

L is actually the identity

Yes: the function [math]L:(x,y)\mapsto(2x,2y)'[/math] is actually [math][Id]_S^{S'}[/math]. This suggests that any invertible linear map can be thought of as just a change of basis between two bases, and that the columns of its matrix are where each basis element is taken, as stated above with [math]\begin{pmatrix}a&\times \\ b&\times\end{pmatrix}\begin{pmatrix}1\\0\end{pmatrix}=\begin{pmatrix}a\\b\end{pmatrix}[/math].
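To see that [ilmath]L[/ilmath] moves nothing, rebuild the actual point before and after, with each basis vector written in [ilmath]S[/ilmath] coordinates (so [ilmath]S'[/ilmath] has basis vectors of half length). This sketch uses a hypothetical point_from_coords helper, and the sample points are arbitrary.

```python
from fractions import Fraction


def mat_vec(m, v):
    """Multiply a 2x2 matrix, given as a tuple of rows, by a 2-vector."""
    return (m[0][0] * v[0] + m[0][1] * v[1],
            m[1][0] * v[0] + m[1][1] * v[1])


def point_from_coords(basis, coords):
    """Return sum(a_i * b_i), each b_i given in S coordinates."""
    x = sum(a * b[0] for a, b in zip(coords, basis))
    y = sum(a * b[1] for a, b in zip(coords, basis))
    return (x, y)


half = Fraction(1, 2)
S_basis = [(1, 0), (0, 1)]
S_prime_basis = [(half, 0), (0, half)]
L = ((2, 0), (0, 2))  # [L]_S^{S'}, i.e. [Id]_S^{S'}

for p in [(0, 1), (3, -4), (Fraction(5, 7), 2)]:
    before = point_from_coords(S_basis, p)
    after = point_from_coords(S_prime_basis, mat_vec(L, p))
    assert before == after  # same point; only its coordinates changed
print("L leaves every sample point where it was")
```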

So why is there room for a letter in [math][\cdot]_A^B[/math]?

Although it is true that every invertible linear transform is a change of basis from one basis to some other, we do not have to care what that other basis is. For example, suppose we wish to scale all points by 3. This has the matrix [math]\begin{pmatrix}3&0 \\ 0&3\end{pmatrix}[/math], and we will call this transform [math]S_3[/math] for "scale-3".

Then:

- [math]S_3:\mathbb{R}^2\rightarrow\mathbb{R}^2[/math]

- [math][S_3]_E^E=\begin{pmatrix}3&0\\0&3\end{pmatrix}[/math], where [ilmath]E[/ilmath] is some basis of [ilmath]\mathbb{R}^2[/ilmath]; this is certainly not the identity.

Note: [math][\cdot]_B[/math] is simply shorthand for [math][\cdot]_B^B[/math].
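By contrast, [ilmath]S_3[/ilmath] uses the same basis on both sides, so a change in coordinates is a genuine change of point. A minimal sketch, assuming [ilmath]E[/ilmath] is the standard basis (for this particular map any basis would give the same matrix, since it is a multiple of the identity); the point p is an arbitrary example.

```python
def mat_vec(m, v):
    """Multiply a 2x2 matrix, given as a tuple of rows, by a 2-vector."""
    return (m[0][0] * v[0] + m[0][1] * v[1],
            m[1][0] * v[0] + m[1][1] * v[1])


S3 = ((3, 0), (0, 3))  # [S_3]_E^E
Id = ((1, 0), (0, 1))  # [Id]_E^E

p = (1, 2)             # arbitrary point, in E coordinates
print(mat_vec(Id, p))  # (1, 2): same basis, same point, same coordinates
print(mat_vec(S3, p))  # (3, 6): same basis, so this is a different point
```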